The Bangification of AI

Or why it’s so creepy to be a woman on the internet in 2025

In 2022, while critiquing the ever-worsening consumer experience of shopping on Amazon, Cory Doctorow coined the term “enshittification.” Amazon wasn’t the only tech platform that was enshittified; nearly all of the technology services that we’ve interwoven with our “real” lives have become increasingly unusable. Facebook? Full of AI slop. TikTok? Ad after ad on full-volume autoplay. As the back cover copy on Doctorow’s new book recounts:

The once-glorious internet was colonized by platforms that made all-but-magical promises to their users―and, at least initially, seemed to deliver on them. But once users were locked in, the platforms turned on them to make their business customers happy. Then the platforms turned to abusing their business customers to claw back all the value for themselves. In the end, the platforms die.

RIP Myspace and Tumblr, we knew you when. Doctorow’s concept resonated so strongly with people all over the world that the American Dialect Society made “enshittification” their word of the year in 2023, followed by Australia’s Macquarie Dictionary in 2024.

Things are steadily—and undoubtedly—getting worse for everyone online. But as a woman, I’ve felt a special sort of ick these past few weeks. It’s not just dismay at the capitalistic drive for profit above all else; it is the nausea-inducing realization that as new tech is developed, the objectification of women is seen as a necessary—or in some cases, even desirable—outcome.

Generative artificial intelligence’s race to the bottom has continued apace, and with each new development in the sector, women seem to be the casualties. Rather than devoting as many resources as possible to solving the world’s biggest problems, as we’ve been promised AI will do, the technologists of the world are asking simply: how can I put my dick in this?

Welcome, ladies and gentlemen, to the bangification of AI. You’ve been here for years, you just didn’t know it.

In 2019, the vast majority of deepfake content online depicted women in sexual situations they neither consented to nor participated in. Six years later, social media companies are not doing enough to crack down on apps that deliver that content, which has become even more accessible; as The American Sunlight Project and Indicator found in September 2025, Meta is turning a blind eye to the influx of apps that advertise services to digitally remove a woman or girl’s clothing. And in the expanding metaverse of virtual reality, women are getting meta-raped, which Clare McGlynn and Carlotta Rigotti describe as “non-consensual touching, image-based sexual abuses and novel forms of gendered harm, often trivialised and inadequately addressed by current laws.”

Some might argue that these examples are primarily instances of user-generated content, just a few (hundred thousand) bad apples in a universe of upstanding users, but the numbers don’t really matter here. When you’ve been depicted in nonconsensual deepfake sexual abuse, as I have, it doesn’t matter if one or one thousand people were involved. The content is still abusive, and it has been shown to have psychological impacts similar to “in-person” sexual harassment.

But it gets worse: tech platforms are catering to the instinct to bang. Particularly in the AI world, it seems companies are bangifying their platforms as a first order of business. This October, we’ve seen at least three major instances of bangification as these companies seek to outcompete one another in their steady race to the bottom.

Take Sora, OpenAI’s new generative video-making social app. (If you’re not in the know, imagine if TikTok had a baby with the most hyper-realistic video generator you’ve ever seen; that’s Sora.) They’ve put some guardrails on what users can do: you can’t make nude content or explicitly sexual content. But as Business Insider’s Katie Notopoulos found, “niche fetish videos” are taking over the app. Men are making images of Notopoulos and other women on the app with large, pregnant bellies for their “personal sexual gratification.” And they’re not stopping there. “An adult man...made about 20 videos with variations of a prompt asking for a preteen girl in a grass skirt, dancing.” Other men—and underage boys—are editing themselves into videos with an adult porn star. As Notopoulos writes, “There’s hardly any women on [Sora], and it’s no wonder why. Women innately understand the risk of letting anyone make videos with their faces — the likelihood of something being creepy is extremely high.”

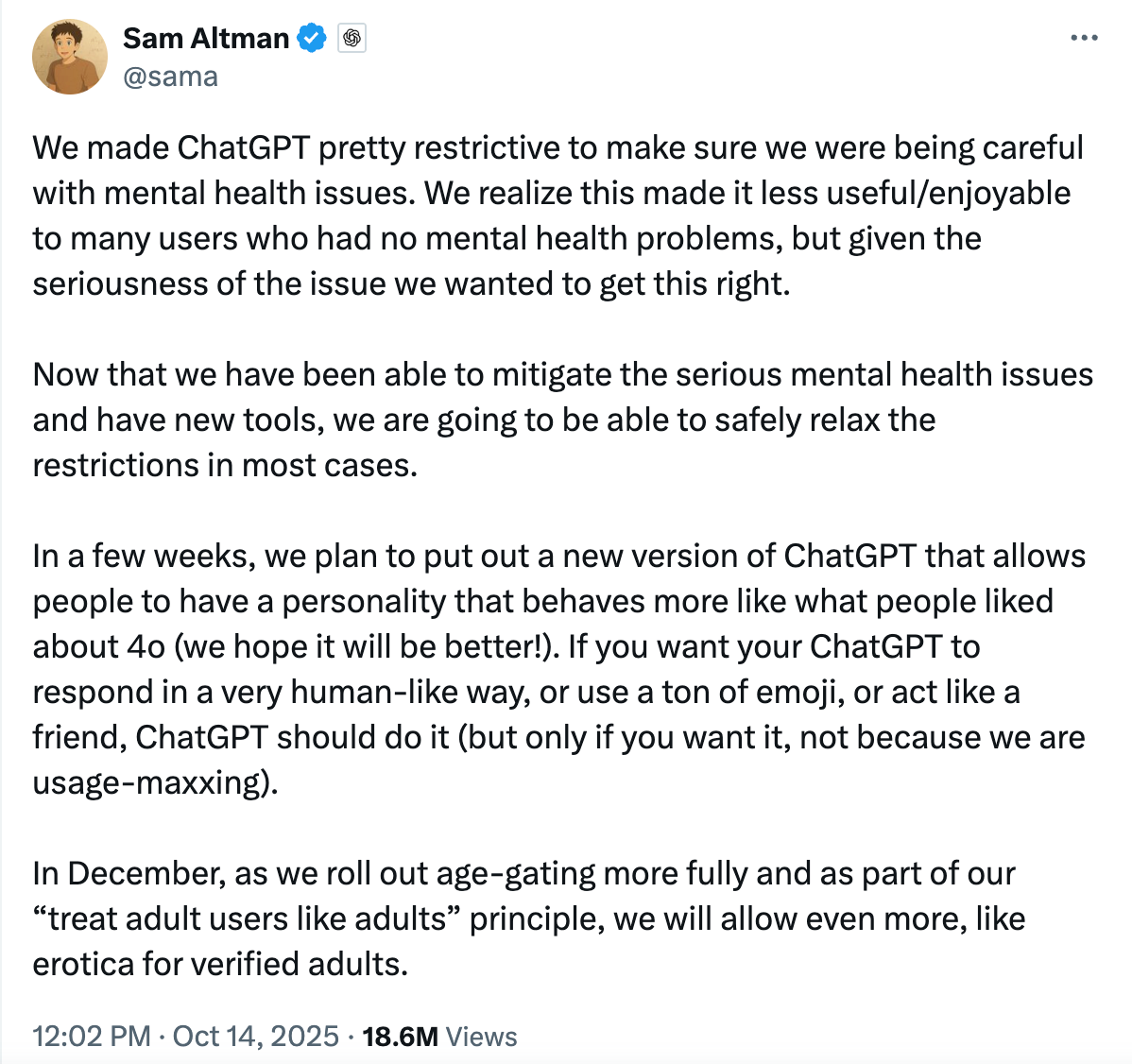

And then there are OpenAI CEO Sam Altman’s pronouncements about the evolution of ChatGPT, now bangified:

Congrats, Sam! You’re not going to “usage-maxx” for a friendlier chatbot, but you are advertising the equivalent of a ChatGPT blowup doll to the world. Your moral fiber is made of truly stern stuff.[1]

Amid a reported increase in male loneliness and a concerning rise in misogyny, ChatGPT—like Elon Musk’s xAI before it—is clearly responding to demand. (I just dry heaved. Did you?) Don’t worry, Altman tells us, the service will be “age-gated,” that is, underage users won’t be able to access it. Let’s be clear, though: even if the service is adults-only, unstable men will access these chatbots. After many dozens of hours of chatting with a pliant, submissive AI “girlfriend,” they will be dismayed when women in real life do not treat them with the same deference.

Perhaps that’s where Tyler Cowen—noted economist and professor at George Mason University—was coming from when he wrote about Tilly Norwood, the “AI actress” who drew condemnation from major human actors. Cowen didn’t condemn Norwood’s creation—quite the opposite. He wrote, “if you wish to see a virgin on-screen, this is one of your better chances.”

How about “if you wish to see a virgin on-screen, seek psychological help?”[2]

I have heard serious men in serious places argue that the creation of deepfake sexual abuse material depicting nonexistent women and girls is a societal good; they argue it can be used by deviants and pedophiles so they don’t commit harm “in real life.” But whether we’re mitigating harm from loneliness or sex predators or cranky preschoolers who “want TV now!,” giving an inch doesn’t change the ultimate outcome: each of these populations will eventually want a mile.

There are completely legitimate, and perhaps even positive, areas for sex and AI to mix, with appropriate safeguards. But this field’s safeguarding record, from mental health to data privacy, is abysmal. All available evidence points to this tool being another frontier where men are radicalized toward offline misogyny. So far, generative AI’s most visible use has been to humiliate and abuse women. And bangification is broader than just AI; the internet, by and large, not only harasses and endangers women, it’s built to incentivize such behavior. Social platforms were primarily built by and for white, straight men. And let’s not forget that the porn industry, which these sexualized AI tools are “learning” from, runs on the subjugation, objectification, and degradation of women.

I’d like AI to do my laundry. I’d like it to plan my meals and book my business travel. But right now, the best use case for AI in my “real life” is combing through and categorizing large datasets of abusive content that I’d rather not expose myself to—and some of that data is AI generated itself.

AI’s champions have promised us so much. Maybe they believe bangification is a necessary evil to fulfill those promises. Or maybe they are just forever teenage boys, distracted from building a flying car by trying to violate the tailpipe.

Massive thanks to:

my dear friend (and receiver of my most unhinged voice notes) Emily Horne of Spin Class, who encouraged me to write about this phenomenon and name it 🙂 You won’t regret subscribing to her;

Susanna Gibson of MyOwnImage, a leading advocate for ending image-based sexual abuse, who helped me connect the dots on some citations.

[2] Especially if you are a man who, with a few months of facial hair growth, could credibly play Santa Claus, and if you happen to teach students the apparent age of the AI avatar after whom you are lusting...

